Redis Study Notes (Part 1)

# Redis Installation, Startup, and Shutdown

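```shell
# Extract the archive
# -z, --gzip, --ungzip: filter the archive through gzip
# -x, --extract, --get: extract files from the archive
# -v, --verbose: list the files as they are processed
# -f <archive>, --file=<archive>: use the given archive file
tar -zxvf redis-6.0.9.tar.gz

# Enter the extracted Redis directory
cd redis-6.0.9

# Compile
make

# If make fails with "cc: command not found", install gcc
# On CentOS, install gcc with yum (requires Internet access)
yum -y install gcc
# To install g++ as well (requires Internet access)
yum -y install gcc-c++

# If make fails with "zmalloc.h:50:31: fatal error: jemalloc/jemalloc.h: No such file or directory",
# build against the libc allocator instead of jemalloc
make MALLOC=libc

# If it still fails with "server.c:5343:176: error: 'struct redisServer' has no member named 'maxmemory'",
# the system gcc is too old for this Redis version; install gcc 9 from devtoolset-9
# See https://blog.csdn.net/Mr_FenKuan/article/details/111072880
yum install centos-release-scl -y
yum install devtoolset-9-gcc
# Open a shell that uses gcc 9, then run make again
scl enable devtoolset-9 bash

# Install (copies the binaries to /usr/local/bin by default)
make install

# Show where Redis was installed
whereis redis-cli
whereis redis-server
```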

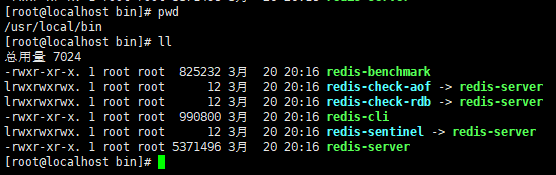

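| File | Description |
|---|---|
| redis-server | Redis server |
| redis-cli | Redis command-line client |
| redis-benchmark | Redis benchmarking tool |
| redis-sentinel | Redis Sentinel: monitors a master/replica setup and fails over automatically, for high availability |
| redis-check-rdb | Checks RDB files |
| redis-check-aof | Checks AOF files |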

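```shell
# Start the Redis server with a specific configuration file
/usr/local/bin/redis-server /opt/redis/redis.conf
```

```shell
# Start the Redis client against a specific port
/usr/local/bin/redis-cli -p 6379
```

```shell
# Test the connection
127.0.0.1:6379> ping
PONG
```

```shell
# Stop the Redis server
127.0.0.1:6379> shutdown
# Exit the Redis client
not connected> exit
# No redis-server process is left; only the grep command itself is listed
[root@localhost redis]# ps -ef|grep redis
root 12324 10325 0 20:41 pts/1 00:00:00 grep --color=auto redis
```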

```shell
# Benchmark performance
redis-benchmark -h 127.0.0.1 -p 6379
```

# Redis Basic Operations

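```shell
# Redis has 16 databases by default, and database 0 is used unless you switch with select
127.0.0.1:6379> select 1
OK
127.0.0.1:6379[1]>

# Return the number of keys in the current database
dbsize
# Find all keys matching the given pattern
keys *
# Check whether the given key exists
exists key
# Delete one or more keys
del key [key ...]
# Set a timeout on a key, in seconds
expire key seconds
# Remaining time to live in seconds (-1: the key has no timeout; -2: the key does not exist)
ttl key
# Return the type of the value stored at key, as a string
type key
# Delete all keys in the current database
flushdb
# Delete all keys in all databases
flushall

# config get <parameter>: view a configuration value
127.0.0.1:6379> config get port
1) "port"
2) "6379"
127.0.0.1:6379> config get dir
1) "dir"
2) "/opt/redis"
```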
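The return values of expire and ttl are easy to mix up. The toy model below pins them down under stated assumptions: it is an in-memory Java illustration, not a Redis client. The class `TtlModel` and its simulated clock are made up for this note. It assumes Redis semantics of "set clears an existing timeout", "expired keys disappear" and "ttl rounds the remaining milliseconds to the nearest second".

```java
import java.util.HashMap;
import java.util.Map;

// Toy in-memory model of SET / EXISTS / EXPIRE / TTL (illustration only, not a Redis client)
class TtlModel {
    private final Map<String, String> data = new HashMap<>();
    private final Map<String, Long> expireAtMillis = new HashMap<>();
    private long nowMillis = 0; // simulated clock so the behavior is deterministic

    // Advance the simulated clock
    void advance(long millis) { nowMillis += millis; }

    // SET stores the value and discards any previous timeout
    void set(String key, String value) {
        data.put(key, value);
        expireAtMillis.remove(key);
    }

    // Drop the key lazily once its deadline has passed
    private void purgeIfExpired(String key) {
        Long deadline = expireAtMillis.get(key);
        if (deadline != null && deadline <= nowMillis) {
            data.remove(key);
            expireAtMillis.remove(key);
        }
    }

    boolean exists(String key) {
        purgeIfExpired(key);
        return data.containsKey(key);
    }

    // EXPIRE: 1 if the timeout was set, 0 if the key does not exist
    int expire(String key, long seconds) {
        if (!exists(key)) return 0;
        expireAtMillis.put(key, nowMillis + seconds * 1000);
        return 1;
    }

    // TTL: -2 if the key does not exist, -1 if it has no timeout, else the remaining seconds (rounded)
    long ttl(String key) {
        if (!exists(key)) return -2;
        Long deadline = expireAtMillis.get(key);
        if (deadline == null) return -1;
        return (deadline - nowMillis + 500) / 1000;
    }
}
```

For example: after `set k v` and `expire k 10`, waiting 3 seconds gives `ttl k` = 7. After another 7 seconds the key is gone, and `ttl k` returns -2.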

# Redis Data Types

# Basic Data Types

# String

```shell
# Set key to value; if key already exists, its value is overwritten
set key value
# Set key to value with a time to live of `seconds`; an existing value is overwritten
setex key seconds value
# Set key to value only if key does not exist; otherwise do nothing
setnx key value
# Get the value of key
get key
# Set key to value and return the value it held before
getset key value
# Set several keys at once
mset key value [key value ...]
# Get several keys at once
mget key [key ...]
# Increment by 1
incr key
# Increment by the given step
incrby key increment
# Decrement by 1
decr key
# Decrement by the given step
decrby key decrement
```
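incr and its relatives only work when the stored string is an integer. That is why `incr` on a value such as "v1" fails; the transaction section below shows this error. The sketch below is a hypothetical in-memory model, `StringCommandsModel`, not Redis code. It assumes that a missing key counts as 0 and that values must fit in a signed 64-bit integer. The error strings are modeled on what redis-cli prints. One known approximation: `Long.parseLong` accepts some strings (for example "007") that Redis rejects.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of a few string commands; values are stored as strings, as in Redis
class StringCommandsModel {
    private final Map<String, String> data = new HashMap<>();

    void set(String key, String value) { data.put(key, value); }

    String get(String key) { return data.get(key); }

    // SETNX: returns 1 if the key was set, 0 if it already existed
    int setnx(String key, String value) {
        if (data.containsKey(key)) return 0;
        data.put(key, value);
        return 1;
    }

    // GETSET: store the new value and return the previous one (null if the key was missing)
    String getset(String key, String value) { return data.put(key, value); }

    // INCRBY: a missing key counts as 0; the stored string must parse as a signed 64-bit integer
    long incrby(String key, long increment) {
        long current;
        try {
            current = Long.parseLong(data.getOrDefault(key, "0"));
        } catch (NumberFormatException e) {
            throw new IllegalStateException("ERR value is not an integer or out of range");
        }
        long next;
        try {
            next = Math.addExact(current, increment);
        } catch (ArithmeticException e) {
            throw new IllegalStateException("ERR increment or decrement would overflow");
        }
        data.put(key, Long.toString(next)); // the result is stored back as a string
        return next;
    }

    long incr(String key) { return incrby(key, 1); }

    long decr(String key) { return incrby(key, -1); }
}
```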

# List (double-ended list)

```shell
# lpush key element [element ...]: insert one or more values at the head of the list, one after another
127.0.0.1:6379> lpush list 3 2 1
(integer) 3
# rpush key element [element ...]: append one or more values at the tail of the list, one after another
127.0.0.1:6379> rpush list 4 5 6
(integer) 6
# lrange key start stop: return the elements in the given index range; -1 means the last element
127.0.0.1:6379> lrange list 0 -1
1) "1"
2) "2"
3) "3"
4) "4"
5) "5"
6) "6"
# llen key: return the number of elements
127.0.0.1:6379> llen list
(integer) 6
# lpop key [count]: remove and return elements from the head (count requires Redis 6.2+)
127.0.0.1:6379> lpop list
"1"
127.0.0.1:6379> lrange list 0 -1
1) "2"
2) "3"
3) "4"
4) "5"
5) "6"
127.0.0.1:6379> lpop list 2
1) "2"
2) "3"
127.0.0.1:6379> lrange list 0 -1
1) "4"
2) "5"
3) "6"
# rpop key [count]: remove and return elements from the tail
# (the list was rebuilt as 1 2 3 4 5 6 before this example)
127.0.0.1:6379> rpop list
"6"
127.0.0.1:6379> lrange list 0 -1
1) "1"
2) "2"
3) "3"
4) "4"
5) "5"
127.0.0.1:6379> rpop list 2
1) "5"
2) "4"
127.0.0.1:6379> lrange list 0 -1
1) "1"
2) "2"
3) "3"

# linsert key BEFORE|AFTER pivot element: insert an element before or after the pivot element
# lindex key index: get the element at the given index
# lpos key element [RANK rank] [COUNT num-matches] [MAXLEN len]: get the index of a matching element
```
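Why does `lpush list 3 2 1` produce 1 2 3? Each argument is pushed onto the head in turn, so the last argument ends up first. The toy model below, a hypothetical class `ListModel` backed by an `ArrayDeque`, reproduces that order. It also reproduces the lrange index rules: negative indexes count from the end, and a stop index past the end is clamped. It is a sketch of the semantics, not how Redis stores lists.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Toy model of LPUSH / RPUSH / LPOP / RPOP / LRANGE on a double-ended queue
class ListModel {
    private final Deque<String> items = new ArrayDeque<>();

    // Each element is pushed onto the head in argument order, so "lpush list 3 2 1" yields 1 2 3
    int lpush(String... elements) {
        for (String e : elements) items.addFirst(e);
        return items.size();
    }

    int rpush(String... elements) {
        for (String e : elements) items.addLast(e);
        return items.size();
    }

    // Pop up to `count` elements from the head
    List<String> lpop(int count) {
        List<String> out = new ArrayList<>();
        while (out.size() < count && !items.isEmpty()) out.add(items.pollFirst());
        return out;
    }

    // Pop up to `count` elements from the tail
    List<String> rpop(int count) {
        List<String> out = new ArrayList<>();
        while (out.size() < count && !items.isEmpty()) out.add(items.pollLast());
        return out;
    }

    // LRANGE: negative indexes count from the end (-1 is the last element); stop is clamped to the end
    List<String> lrange(int start, int stop) {
        List<String> all = new ArrayList<>(items);
        int len = all.size();
        if (start < 0) start += len;
        if (stop < 0) stop += len;
        if (start < 0) start = 0;
        if (start > stop || start >= len) return new ArrayList<>();
        if (stop >= len) stop = len - 1;
        return new ArrayList<>(all.subList(start, stop + 1));
    }
}
```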

# Set (unordered set)

```shell
# Add one or more members
sadd key member [member ...]
# Remove and return one or more random members
spop key [count]
# Return all members
smembers key
# Return one or more random members without removing them
srandmember key [count]
# Check whether member belongs to the set
sismember key member
# Return the number of members
scard key
# Difference: the members of the first set that are not in any of the other sets
sdiff key [key ...]
# Intersection
sinter key [key ...]
# Union
sunion key [key ...]
```
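sdiff is the one set operation whose result depends on argument order: only the first set's unique members survive. The sketch below expresses the three operations with `java.util.Set` to make that visible. The class name `SetOps` is made up for illustration.

```java
import java.util.HashSet;
import java.util.Set;

// SDIFF / SINTER / SUNION expressed with java.util.Set
final class SetOps {
    private SetOps() {}

    // SDIFF: members of the first set that appear in none of the others
    @SafeVarargs
    static Set<String> sdiff(Set<String> first, Set<String>... others) {
        Set<String> result = new HashSet<>(first);
        for (Set<String> s : others) result.removeAll(s);
        return result;
    }

    // SINTER: members present in every set
    @SafeVarargs
    static Set<String> sinter(Set<String> first, Set<String>... others) {
        Set<String> result = new HashSet<>(first);
        for (Set<String> s : others) result.retainAll(s);
        return result;
    }

    // SUNION: members present in at least one set
    @SafeVarargs
    static Set<String> sunion(Set<String> first, Set<String>... others) {
        Set<String> result = new HashSet<>(first);
        for (Set<String> s : others) result.addAll(s);
        return result;
    }
}
```

With A = {a, b, c} and B = {c, d}, `sdiff A B` is {a, b} while `sdiff B A` is {d}.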

# ZSet (sorted set)

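```shell
# Add members with scores
# NX: only add new members; XX: only update existing members; GT/LT: only update when the new score is greater/less;
# CH: return the number of changed members; INCR: increment the score instead of setting it
zadd key [NX|XX] [GT|LT] [CH] [INCR] score member [score member ...]
# Remove and return the members with the highest scores
zpopmax key [count]
# Remove and return the members with the lowest scores
zpopmin key [count]
# Return the members in the given range; for index ranges, -1 means the last member
zrange key min max [BYSCORE|BYLEX] [REV] [LIMIT offset count] [WITHSCORES]
# Return the number of members
zcard key
```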
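A sorted set orders members by score, and members with equal scores by member name, which is what zrange, zpopmin and zpopmax rely on. The toy model below, a hypothetical class `SortedSetModel`, reproduces that ordering with a `TreeSet`. Redis compares member names byte by byte; Java's `String` comparison gives the same order for ASCII names. Updating a score requires removing the member first, which mirrors the "update" case of zadd.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeSet;

// Toy sorted set: ordered by score, ties broken by member name
class SortedSetModel {
    private final Map<String, Double> scores = new HashMap<>();
    private final TreeSet<String> ordered = new TreeSet<>(
            Comparator.comparingDouble((String m) -> scores.get(m))
                      .thenComparing(Comparator.<String>naturalOrder()));

    // ZADD without flags: add the member or update its score; returns 1 for a new member
    int zadd(double score, String member) {
        boolean isNew = !scores.containsKey(member);
        if (!isNew) ordered.remove(member); // remove while the old score is still recorded
        scores.put(member, score);
        ordered.add(member);
        return isNew ? 1 : 0;
    }

    int zcard() { return scores.size(); }

    // ZPOPMIN / ZPOPMAX for a single member (null if the set is empty)
    String zpopmin() { return forget(ordered.pollFirst()); }

    String zpopmax() { return forget(ordered.pollLast()); }

    private String forget(String member) {
        if (member != null) scores.remove(member);
        return member;
    }

    // ZRANGE by index: negative indexes count from the end, as with LRANGE
    List<String> zrange(int start, int stop) {
        List<String> all = new ArrayList<>(ordered);
        int len = all.size();
        if (start < 0) start += len;
        if (stop < 0) stop += len;
        if (start < 0) start = 0;
        if (start > stop || start >= len) return new ArrayList<>();
        if (stop >= len) stop = len - 1;
        return new ArrayList<>(all.subList(start, stop + 1));
    }
}
```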

# Hash

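```shell
# Create the hash if needed and set (or overwrite) one or more fields
hset key field value [field value ...]
# Return the value of field
hget key field
# Set several fields (deprecated since Redis 4.0, because hset accepts several field/value pairs)
hmset key field value [field value ...]
# Return the values of several fields
hmget key field [field ...]
# Return all field names of the hash stored at key
hkeys key
# Return all values of the hash stored at key
hvals key
# Return all fields and values
hgetall key
# Delete one or more fields
hdel key field [field ...]
```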

# Special Data Types

# HyperLogLog

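HyperLogLog is an algorithm for cardinality counting (counting distinct elements). Each HyperLogLog key needs only 12 KB of memory to estimate the cardinality of up to nearly 2^64 distinct elements, with a standard error of 0.81%.

For example, the data set {1, 3, 5, 7, 5, 7, 8} has the distinct elements {1, 3, 5, 7, 8}, so its cardinality (number of distinct elements) is 5. Cardinality estimation means computing this number quickly, within an acceptable error.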

```shell
# Add the given elements to a HyperLogLog
pfadd key element [element ...]
# Return the estimated cardinality of the given HyperLogLog(s)
pfcount key [key ...]
# Merge several HyperLogLogs into one
pfmerge destkey sourcekey [sourcekey ...]
```
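Where the 12 KB figure comes from: Redis uses 2^14 = 16384 registers of 6 bits each, so 16384 × 6 = 98,304 bits = 12,288 bytes = 12 KB. The sketch below is a minimal HyperLogLog with the same register count, written to show how pfadd, pfcount and pfmerge work. It differs from Redis in three assumed ways:

- it stores one byte per register;
- it uses SplitMix64 as the hash instead of Redis's MurmurHash64A;
- it uses the classic estimator from the original HyperLogLog paper, with linear counting for small sets, rather than Redis's exact estimator.

The class name is hypothetical.

```java
// Minimal HyperLogLog sketch: 2^14 registers like Redis, with a simplified hash and estimator
class HyperLogLogSketch {
    private static final int P = 14;              // bits used to pick a register
    private static final int M = 1 << P;          // 16384 registers
    private final byte[] registers = new byte[M]; // one byte per register (Redis packs 6 bits)

    // SplitMix64 output for the given input; a stand-in for Redis's MurmurHash64A
    private static long hash(long x) {
        long z = x + 0x9E3779B97F4A7C15L;
        z = (z ^ (z >>> 30)) * 0xBF58476D1CE4E5B9L;
        z = (z ^ (z >>> 27)) * 0x94D049BB133111EBL;
        return z ^ (z >>> 31);
    }

    // PFADD-like: returns true if a register changed
    boolean add(long element) {
        long h = hash(element);
        int index = (int) (h >>> (64 - P));   // top 14 bits choose the register
        long rest = h << P;                   // remaining 50 bits
        int rank = Math.min(Long.numberOfLeadingZeros(rest) + 1, 64 - P + 1); // position of the first 1 bit
        if (rank > registers[index]) {
            registers[index] = (byte) rank;
            return true;
        }
        return false;
    }

    // PFCOUNT-like: harmonic-mean estimate, with linear counting for small cardinalities
    double count() {
        double sum = 0;
        int zeros = 0;
        for (byte r : registers) {
            sum += Math.pow(2, -r);
            if (r == 0) zeros++;
        }
        double alpha = 0.7213 / (1 + 1.079 / M);
        double estimate = alpha * M * M / sum;
        if (estimate <= 2.5 * M && zeros > 0) {
            estimate = M * Math.log((double) M / zeros); // linear counting
        }
        return estimate;
    }

    // PFMERGE-like: the union keeps the larger value of each register
    void merge(HyperLogLogSketch other) {
        for (int i = 0; i < M; i++) {
            registers[i] = (byte) Math.max(registers[i], other.registers[i]);
        }
    }
}
```

Adding the example data set {1, 3, 5, 7, 5, 7, 8} gives an estimate of about 5. The duplicates 5 and 7 do not change any register.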

# GEO

GEO stores geographic locations (longitude and latitude) and provides operations on them. It was added in Redis 3.2.

```shell
# Add members with their coordinates (longitude first, then latitude)
geoadd key [NX|XX] [CH] longitude latitude member [longitude latitude member ...]
# Get the coordinates of members
geopos key member [member ...]
# Distance between two members (default unit: meters)
geodist key member1 member2 [m|km|ft|mi]
# Return the members within the radius around the given longitude/latitude
# (deprecated since Redis 6.2 in favor of geosearch)
georadius key longitude latitude radius m|km|ft|mi [WITHCOORD] [WITHDIST] [WITHHASH] [COUNT count [ANY]] [ASC|DESC] [STORE key] [STOREDIST key]

127.0.0.1:6379> geoadd city 116.23128 40.22077 beijing 121.48941 31.40527 shanghai 113.27324 23.15792 guangzhou 113.88308 22.55329 shenzhen
(integer) 4
# The coordinates come back slightly different from the input because Redis stores them as a 52-bit geohash
127.0.0.1:6379> geopos city shenzhen
1) 1) "113.88307839632034302"
   2) "22.55329111565713873"
127.0.0.1:6379> geodist city guangzhou shenzhen km
"91.8118"
127.0.0.1:6379> georadius city 100 50 100 km
(empty array)
127.0.0.1:6379> georadius city 100 50 1000 km
(empty array)
127.0.0.1:6379> georadius city 100 50 10000 km
1) "shenzhen"
2) "guangzhou"
3) "shanghai"
4) "beijing"
```

25

# BitMap

Bitmaps: a string value operated on as an array of bits.

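# Redis Transactions

A Redis transaction is not a transaction in the strict sense. Each individual command is atomic, and the queued commands run one after another without other clients' commands in between, but there is no rollback.

A transaction goes through three stages:

- start the transaction: multi
- queue commands
- execute the transaction with exec, or cancel it with discard

The commands multi, exec, discard and watch are the basis of Redis transactions:

- multi starts a transaction;
- exec executes the transaction;
- discard cancels the transaction;
- watch monitors one or more keys; if any of them is modified before the transaction executes, the transaction is not executed;
- unwatch stops watching all keys.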

Normal case:

```shell
# Start the transaction
127.0.0.1:6379> multi
OK
# Queue commands
127.0.0.1:6379(TX)> set k1 v1
QUEUED
127.0.0.1:6379(TX)> set k2 v2
QUEUED
127.0.0.1:6379(TX)> get k1
QUEUED
127.0.0.1:6379(TX)> get k2
QUEUED
127.0.0.1:6379(TX)> set k3 v3
QUEUED
# Execute the transaction
127.0.0.1:6379(TX)> exec
1) OK
2) OK
3) "v1"
4) "v2"
5) OK
```

Error while queuing, for example an unknown command or a syntax error: exec discards the whole transaction, and none of the commands run.

```shell
127.0.0.1:6379> multi
OK
127.0.0.1:6379(TX)> set k1 v1
QUEUED
127.0.0.1:6379(TX)> set k2 v2
QUEUED
127.0.0.1:6379(TX)> suibianshuru666
(error) ERR unknown command `suibianshuru666`, with args beginning with:
127.0.0.1:6379(TX)> set k3 v3
QUEUED
127.0.0.1:6379(TX)> exec
(error) EXECABORT Transaction discarded because of previous errors.
```

Error at execution time, for example incr on a value that is not an integer: only the failing command returns an error. The other commands still run, and nothing is rolled back.

```shell
127.0.0.1:6379> multi
OK
127.0.0.1:6379(TX)> set k1 v1
QUEUED
127.0.0.1:6379(TX)> set k2 v2
QUEUED
127.0.0.1:6379(TX)> incr k1
QUEUED
127.0.0.1:6379(TX)> set k3 v3
QUEUED
127.0.0.1:6379(TX)> exec
1) OK
2) OK
3) (error) ERR value is not an integer or out of range
4) OK
```

Optimistic locking with watch:

```shell
# Watch the balance
127.0.0.1:6379> watch balance
OK
127.0.0.1:6379> multi
OK
# Deduct 10 from the balance
127.0.0.1:6379(TX)> decrby balance 10
QUEUED
# Add 10 to the payments
127.0.0.1:6379(TX)> incrby payment 10
QUEUED
# Execute; if balance had been modified before exec, exec would return (nil) and run nothing
127.0.0.1:6379(TX)> exec
1) (integer) 9990
2) (integer) 10
```
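How watch turns a transaction into an optimistic lock can be modeled with per-key version numbers:

- watch remembers each key's version;
- any write bumps the version;
- exec runs the queue only if no watched version changed.

The sketch below is a hypothetical single-process model, `WatchModel`, and not how Redis implements watch internally. It replays the balance example and then a conflicting write. In real code the client usually retries from watch when exec returns nil.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy single-process model of WATCH / MULTI / EXEC using per-key version counters
class WatchModel {
    private final Map<String, Long> values = new HashMap<>();
    private final Map<String, Long> versions = new HashMap<>();

    long get(String key) { return values.getOrDefault(key, 0L); }

    private long version(String key) { return versions.getOrDefault(key, 0L); }

    // Any write, from any client, bumps the key's version
    long incrby(String key, long delta) {
        long next = get(key) + delta;
        values.put(key, next);
        versions.merge(key, 1L, Long::sum);
        return next;
    }

    Client newClient() { return new Client(); }

    // Per-connection transaction state
    class Client {
        private final Map<String, Long> watched = new HashMap<>();
        private final List<String> queuedKeys = new ArrayList<>();
        private final List<Long> queuedDeltas = new ArrayList<>();

        // WATCH: remember the version seen now
        void watch(String... keys) {
            for (String k : keys) watched.put(k, version(k));
        }

        // After MULTI a command is only queued, not executed
        void queueIncrby(String key, long delta) {
            queuedKeys.add(key);
            queuedDeltas.add(delta);
        }

        // EXEC: null (redis-cli shows "(nil)") if a watched key changed, else the queued results
        List<Long> exec() {
            try {
                for (Map.Entry<String, Long> e : watched.entrySet()) {
                    if (version(e.getKey()) != e.getValue()) return null;
                }
                List<Long> results = new ArrayList<>();
                for (int i = 0; i < queuedKeys.size(); i++) {
                    results.add(incrby(queuedKeys.get(i), queuedDeltas.get(i)));
                }
                return results;
            } finally {
                // EXEC always unwatches all keys and empties the queue
                watched.clear();
                queuedKeys.clear();
                queuedDeltas.clear();
            }
        }
    }
}
```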

# Redis Publish/Subscribe

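```shell
# Subscribe to one or more channels
subscribe channel [channel ...]
# Send a message to a channel (returns the number of clients that received it)
publish channel message
# Unsubscribe from one or more channels (from all channels if none are given)
unsubscribe [channel [channel ...]]
```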
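Pub/sub is fire-and-forget. publish delivers only to the clients subscribed at that moment and returns how many received the message, and nothing is stored for later subscribers. The toy in-process broker below, a hypothetical class `PubSubModel` that is not a Redis client, makes that behavior explicit.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Consumer;

// Toy in-process model of SUBSCRIBE / PUBLISH / UNSUBSCRIBE
class PubSubModel {
    private final Map<String, Set<Consumer<String>>> channels = new HashMap<>();

    void subscribe(String channel, Consumer<String> subscriber) {
        channels.computeIfAbsent(channel, c -> new LinkedHashSet<>()).add(subscriber);
    }

    void unsubscribe(String channel, Consumer<String> subscriber) {
        Set<Consumer<String>> subs = channels.get(channel);
        if (subs == null) return;
        subs.remove(subscriber);
        if (subs.isEmpty()) channels.remove(channel);
    }

    // PUBLISH: deliver to the current subscribers only and return how many received the message;
    // the message is not stored, so later subscribers never see it
    int publish(String channel, String message) {
        List<Consumer<String>> receivers =
                new ArrayList<>(channels.getOrDefault(channel, Collections.emptySet()));
        for (Consumer<String> r : receivers) r.accept(message);
        return receivers.size();
    }
}
```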

# Redis Configuration File

```conf
################################# GENERAL #####################################
# By default Redis does not run as a daemon. Use 'yes' if you need it.
# Note that Redis will write a pid file in /var/run/redis.pid when daemonized.
# When Redis is supervised by upstart or systemd, this parameter has no impact.
# Changed from no to yes. Put notes on their own lines: redis.conf does not accept a comment after a directive.
daemonize yes
```

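# Redis Persistence

Redis provides two persistence mechanisms: RDB (Redis DataBase) and AOF (Append Only File).

- By default RDB is enabled and AOF is disabled;
- if both RDB and AOF are enabled, Redis loads the AOF file on startup, because AOF recovers the data more completely;
- with either RDB or AOF, the main thread keeps serving client reads and writes, while RDB snapshots (bgsave) and AOF rewrites are produced by a forked child process.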

# RDB (Redis DataBase)

RDB: when the specified number of writes occurs within a given time window, Redis takes a snapshot of its data and writes it to disk or other storage.

```conf
################################ SNAPSHOTTING ################################
# Save the DB to disk.
#
# save <seconds> <changes>
#
# Redis will save the DB if both the given number of seconds and the given
# number of write operations against the DB occurred.
#
# Snapshotting can be completely disabled with a single empty string argument
# as in following example:
#
# save ""
# (uncommenting the line above disables RDB)
#
# Unless specified otherwise, by default Redis will save the DB:
# * After 3600 seconds (an hour) if at least 1 key changed
# * After 300 seconds (5 minutes) if at least 100 keys changed
# * After 60 seconds if at least 10000 keys changed
#
# You can set these explicitly by uncommenting the three following lines.
#
# save 3600 1
# save 300 100
# save 60 10000

# The filename where to dump the DB
dbfilename dump.rdb

# The working directory.
#
# The DB will be written inside this directory, with the filename specified
# above using the 'dbfilename' configuration directive.
#
# The Append Only File will also be created inside this directory.
#
# Note that you must specify a directory here, not a file name.
dir ./
```

dump.rdb is written in the following cases:

- a save condition from the configuration file is met, that is, the specified number of writes happened within the time window (the `save` rules in the configuration above);
- the save (or bgsave) command is executed;
- the flushall command is executed (flushdb does not write a snapshot by itself);
- the shutdown command is executed.
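How the `save <seconds> <changes>` rules combine: a snapshot is due as soon as any one rule is satisfied, and a rule is satisfied only when both its time and its change count are reached. The sketch below encodes the default rules; the class name `SavePoints` is made up. It uses a strict ">" for elapsed seconds because that is the comparison in Redis's serverCron as far as I can tell; treat the exact boundary as an implementation detail.

```java
// Toy check for RDB save points written as "save <seconds> <changes>"
final class SavePoints {
    private SavePoints() {}

    // Default rules from redis.conf, as {seconds, changes}
    static final long[][] DEFAULT_RULES = {{3600, 1}, {300, 100}, {60, 10000}};

    // A snapshot is due when ANY rule is satisfied: enough changes AND enough time since the last save
    static boolean shouldSave(long[][] rules, long secondsSinceLastSave, long changesSinceLastSave) {
        for (long[] rule : rules) {
            if (changesSinceLastSave >= rule[1] && secondsSinceLastSave > rule[0]) return true;
        }
        return false;
    }
}
```

For example, 9,999 changes within a little over a minute do not trigger a save. The 60-second rule needs 10,000 changes, and the other rules have not waited long enough.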

# AOF (Append Only File)

AOF records every write command executed by Redis. The next time the Redis server starts, it replays these commands from first to last to restore the data.

```conf
############################## APPEND ONLY MODE ###############################
# By default Redis asynchronously dumps the dataset on disk. This mode is
# good enough in many applications, but an issue with the Redis process or
# a power outage may result into a few minutes of writes lost (depending on
# the configured save points).
#
# The Append Only File is an alternative persistence mode that provides
# much better durability. For instance using the default data fsync policy
# (see later in the config file) Redis can lose just one second of writes in a
# dramatic event like a server power outage, or a single write if something
# wrong with the Redis process itself happens, but the operating system is
# still running correctly.
#
# AOF and RDB persistence can be enabled at the same time without problems.
# If the AOF is enabled on startup Redis will load the AOF, that is the file
# with the better durability guarantees.
#
# Please check http://redis.io/topics/persistence for more information.

# Whether AOF is enabled (set to yes to enable it)
appendonly no

# The name of the append only file (default: "appendonly.aof")
appendfilename "appendonly.aof"

# The fsync() call tells the Operating System to actually write data on disk
# instead of waiting for more data in the output buffer. Some OS will really flush
# data on disk, some other OS will just try to do it ASAP.
#
# Redis supports three different modes:
#
# no: don't fsync, just let the OS flush the data when it wants. Faster.
# always: fsync after every write to the append only log. Slow, Safest.
# everysec: fsync only one time every second. Compromise.
#
# The default is "everysec", as that's usually the right compromise between
# speed and data safety. It's up to you to understand if you can relax this to
# "no" that will let the operating system flush the output buffer when
# it wants, for better performances (but if you can live with the idea of
# some data loss consider the default persistence mode that's snapshotting),
# or on the contrary, use "always" that's very slow but a bit safer than
# everysec.
#
# More details please check the following article:
# http://antirez.com/post/redis-persistence-demystified.html
#
# If unsure, use "everysec".

# appendfsync always
appendfsync everysec
# appendfsync no
```

52

# Redis Master-Replica Replication

To test replication, create three configuration files on the same machine, start three Redis servers on different ports, and connect to each one with its own client.

The configuration files are:

redis_6379.conf

```conf
# Shared settings
include /opt/redis/redis.conf
# Per-instance settings
port 6379
pidfile /var/run/redis_6379.pid
logfile "redis_6379.log"
dbfilename dump_6379.rdb
```

redis_6380.conf

```conf
# Shared settings
include /opt/redis/redis.conf
# Per-instance settings
port 6380
pidfile /var/run/redis_6380.pid
logfile "redis_6380.log"
dbfilename dump_6380.rdb
```

redis_6381.conf

```conf
# Shared settings
include /opt/redis/redis.conf
# Per-instance settings
port 6381
pidfile /var/run/redis_6381.pid
logfile "redis_6381.log"
dbfilename dump_6381.rdb
```

Start the servers and connect the clients:

```shell
# Start the servers
/usr/local/bin/redis-server /opt/redis/redis_6379.conf
/usr/local/bin/redis-server /opt/redis/redis_6380.conf
/usr/local/bin/redis-server /opt/redis/redis_6381.conf
# Start the clients (one per terminal)
/usr/local/bin/redis-cli -p 6379
/usr/local/bin/redis-cli -p 6380
/usr/local/bin/redis-cli -p 6381
```

Use info replication to view the replication status. A freshly started Redis server is a master by default.

```shell
127.0.0.1:6379> info replication
# Replication
role:master
connected_slaves:0
master_failover_state:no-failover
master_replid:d8ddf17b411a6e03263cf8f870bd1c2b5fd6e732
master_replid2:0000000000000000000000000000000000000000
master_repl_offset:0
second_repl_offset:-1
repl_backlog_active:0
repl_backlog_size:1048576
repl_backlog_first_byte_offset:0
repl_backlog_histlen:0
```

Use slaveof host port to make a server a replica by hand (since Redis 5.0, replicaof is the preferred name for the same command), or set replicaof <masterip> <masterport> in the replica's configuration file.

```shell
127.0.0.1:6380> slaveof 127.0.0.1 6379
OK
127.0.0.1:6380> info replication
# Replication
role:slave
master_host:127.0.0.1
master_port:6379
master_link_status:up
master_last_io_seconds_ago:10
master_sync_in_progress:0
slave_repl_offset:112
slave_priority:100
slave_read_only:1
connected_slaves:0
master_failover_state:no-failover
master_replid:02496d2e289dd49a98a6f8dde2c153fb1587ba6e
master_replid2:0000000000000000000000000000000000000000
master_repl_offset:112
second_repl_offset:-1
repl_backlog_active:1
repl_backlog_size:1048576
repl_backlog_first_byte_offset:1
repl_backlog_histlen:112
```

After 6381 is attached to 6379 in the same way, the master reports two connected replicas:

```shell
127.0.0.1:6379> info replication
# Replication
role:master
connected_slaves:2
slave0:ip=127.0.0.1,port=6380,state=online,offset=126,lag=1
slave1:ip=127.0.0.1,port=6381,state=online,offset=126,lag=1
master_failover_state:no-failover
master_replid:02496d2e289dd49a98a6f8dde2c153fb1587ba6e
master_replid2:0000000000000000000000000000000000000000
master_repl_offset:126
second_repl_offset:-1
repl_backlog_active:1
repl_backlog_size:1048576
repl_backlog_first_byte_offset:1
repl_backlog_histlen:126
```

Use slaveof no one to detach a replica; it becomes a master again:

```shell
127.0.0.1:6381> slaveof no one
OK
127.0.0.1:6381> info replication
# Replication
role:master
connected_slaves:0
master_failover_state:no-failover
master_replid:954afb72e238ca2fe9b2e84009d52585207ab7c3
master_replid2:02496d2e289dd49a98a6f8dde2c153fb1587ba6e
master_repl_offset:361
second_repl_offset:362
repl_backlog_active:1
repl_backlog_size:1048576
repl_backlog_first_byte_offset:29
repl_backlog_histlen:333
```

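Binding replicas with slaveof host port has two problems:

- if the master goes down, no replica is promoted automatically, so the replicas are left without a master;
- a binding made with the command is not saved in the replica's configuration file, so after a replica restarts, slaveof must be run again by hand.

Sentinel mode was introduced to solve these problems. Sentinel processes monitor the master and its replicas. When enough sentinels agree that the master is down, they elect a leader sentinel. The leader promotes one of the replicas to master and reconfigures the other replicas to follow it.

sentinel.conf: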

```conf
# By default Redis Sentinel does not run as a daemon. Use 'yes' if you need it.
# Note that Redis will write a pid file in /var/run/redis-sentinel.pid when
# daemonized.
# Run Sentinel as a daemon
daemonize yes

# sentinel monitor <master-name> <ip> <redis-port> <quorum>
#
# Tells Sentinel to monitor this master, and to consider it in O_DOWN
# (Objectively Down) state only if at least <quorum> sentinels agree.
#
# Note that whatever is the ODOWN quorum, a Sentinel will require to
# be elected by the majority of the known Sentinels in order to
# start a failover, so no failover can be performed in minority.
#
# Replicas are auto-discovered, so you don't need to specify replicas in
# any way. Sentinel itself will rewrite this configuration file adding
# the replicas using additional configuration options.
# Also note that the configuration file is rewritten when a
# replica is promoted to master.
#
# Note: master name should not include special characters or spaces.
# The valid charset is A-z 0-9 and the three characters ".-_".
#
# The trailing 1 is the quorum: how many sentinels must agree that the master is down
# before it is considered objectively down and a failover can start.
# Only one sentinel runs in this test, so the quorum must be 1.
sentinel monitor mymaster 127.0.0.1 6379 1
```
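The config comments describe two separate thresholds. The quorum only decides when the master counts as objectively down. Starting a failover additionally needs a majority of all known sentinels to elect a leader. The sketch below is a toy calculation with a hypothetical class name, not Sentinel code. It also assumes, based on a reading of sentinel.c, that the leader needs at least `quorum` votes when the quorum is larger than the majority.

```java
// Toy arithmetic for Sentinel's two thresholds (illustration, not Sentinel's code)
final class SentinelMath {
    private SentinelMath() {}

    // ODOWN: at least `quorum` sentinels see the master as down
    static boolean objectivelyDown(int sentinelsSeeingDown, int quorum) {
        return sentinelsSeeingDown >= quorum;
    }

    // Votes needed to elect a failover leader: a majority of all known sentinels,
    // and (assumed from sentinel.c) never fewer than the quorum
    static int votesNeeded(int knownSentinels, int quorum) {
        return Math.max(knownSentinels / 2 + 1, quorum);
    }

    static boolean canFailover(int sentinelsSeeingDown, int quorum, int reachableSentinels, int knownSentinels) {
        return objectivelyDown(sentinelsSeeingDown, quorum)
                && reachableSentinels >= votesNeeded(knownSentinels, quorum);
    }
}
```

With the single sentinel and quorum 1 used in these notes, one sentinel can both detect the failure and run the failover. With five sentinels and quorum 2, two sentinels can mark the master down, but a failover still needs three of them reachable.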

Start Sentinel:

```shell
/usr/local/bin/redis-sentinel /opt/redis/sentinel.conf
```

Shut down 6379 and check the Sentinel log. The log shows the following sequence:

- Sentinel marks 6379 as down (+sdown, then +odown with quorum 1/1) and elects itself leader;
- it promotes 6380 to master (+promoted-slave, +switch-master) and reconfigures 6381 to replicate from 6380;
- once 6379 comes back, Sentinel converts it into a replica of 6380 (+convert-to-slave).

```shell
[root@localhost redis]# /usr/local/bin/redis-sentinel /opt/redis/sentinel.conf
6189:X 21 Mar 2021 12:04:21.330 # oO0OoO0OoO0Oo Redis is starting oO0OoO0OoO0Oo
6189:X 21 Mar 2021 12:04:21.331 # Redis version=6.2.1, bits=64, commit=00000000, modified=0, pid=6189, just started
6189:X 21 Mar 2021 12:04:21.331 # Configuration loaded
6189:X 21 Mar 2021 12:04:21.331 * Increased maximum number of open files to 10032 (it was originally set to 1024).
6189:X 21 Mar 2021 12:04:21.331 * monotonic clock: POSIX clock_gettime
[ASCII-art startup banner omitted: Redis 6.2.1 (00000000/0) 64 bit, running in sentinel mode, port 26379, PID 6189]
6189:X 21 Mar 2021 12:04:21.332 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.
6189:X 21 Mar 2021 12:04:21.334 # Sentinel ID is 2fecb2124682385875472f734a46504ae74760fa
6189:X 21 Mar 2021 12:04:21.334 # +monitor master mymaster 127.0.0.1 6379 quorum 1
6189:X 21 Mar 2021 12:04:21.335 * +slave slave 127.0.0.1:6380 127.0.0.1 6380 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:04:21.337 * +slave slave 127.0.0.1:6381 127.0.0.1 6381 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:14.936 # +sdown master mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:14.936 # +odown master mymaster 127.0.0.1 6379 #quorum 1/1
6189:X 21 Mar 2021 12:05:14.936 # +new-epoch 1
6189:X 21 Mar 2021 12:05:14.936 # +try-failover master mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:14.941 # +vote-for-leader 2fecb2124682385875472f734a46504ae74760fa 1
6189:X 21 Mar 2021 12:05:14.941 # +elected-leader master mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:14.941 # +failover-state-select-slave master mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:15.018 # +selected-slave slave 127.0.0.1:6380 127.0.0.1 6380 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:15.018 * +failover-state-send-slaveof-noone slave 127.0.0.1:6380 127.0.0.1 6380 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:15.118 * +failover-state-wait-promotion slave 127.0.0.1:6380 127.0.0.1 6380 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:15.331 # +promoted-slave slave 127.0.0.1:6380 127.0.0.1 6380 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:15.331 # +failover-state-reconf-slaves master mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:15.361 * +slave-reconf-sent slave 127.0.0.1:6381 127.0.0.1 6381 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:16.325 * +slave-reconf-inprog slave 127.0.0.1:6381 127.0.0.1 6381 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:16.325 * +slave-reconf-done slave 127.0.0.1:6381 127.0.0.1 6381 @ mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:16.425 # +failover-end master mymaster 127.0.0.1 6379
6189:X 21 Mar 2021 12:05:16.425 # +switch-master mymaster 127.0.0.1 6379 127.0.0.1 6380
6189:X 21 Mar 2021 12:05:16.425 * +slave slave 127.0.0.1:6381 127.0.0.1 6381 @ mymaster 127.0.0.1 6380
6189:X 21 Mar 2021 12:05:16.425 * +slave slave 127.0.0.1:6379 127.0.0.1 6379 @ mymaster 127.0.0.1 6380
6189:X 21 Mar 2021 12:05:46.468 # +sdown slave 127.0.0.1:6379 127.0.0.1 6379 @ mymaster 127.0.0.1 6380
6189:X 21 Mar 2021 12:06:09.740 # -sdown slave 127.0.0.1:6379 127.0.0.1 6379 @ mymaster 127.0.0.1 6380
6189:X 21 Mar 2021 12:06:19.731 * +convert-to-slave slave 127.0.0.1:6379 127.0.0.1 6379 @ mymaster 127.0.0.1 6380
```

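# Redis Cluster

Redis Cluster automatically shards data across multiple Redis nodes. The key space is divided into 16384 hash slots, and each master node serves a range of them.

Redis Cluster provides:

- automatic splitting of the data set across multiple nodes;
- continued service when some of the nodes fail or cannot be reached.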

```shell
# Start the six servers
[root@localhost redis]# /usr/local/bin/redis-server /opt/redis/cluster/redis_6379.conf
[root@localhost redis]# /usr/local/bin/redis-server /opt/redis/cluster/redis_6380.conf
[root@localhost redis]# /usr/local/bin/redis-server /opt/redis/cluster/redis_6381.conf
[root@localhost redis]# /usr/local/bin/redis-server /opt/redis/cluster/redis_6389.conf
[root@localhost redis]# /usr/local/bin/redis-server /opt/redis/cluster/redis_6390.conf
[root@localhost redis]# /usr/local/bin/redis-server /opt/redis/cluster/redis_6391.conf
[root@localhost redis]# ps -ef|grep redis
root 8545 1 0 13:39 ? 00:00:00 /usr/local/bin/redis-server *:6379 [cluster]
root 8550 1 0 13:39 ? 00:00:00 /usr/local/bin/redis-server *:6380 [cluster]
root 8567 1 0 13:40 ? 00:00:00 /usr/local/bin/redis-server *:6381 [cluster]
root 8572 1 0 13:40 ? 00:00:00 /usr/local/bin/redis-server *:6389 [cluster]
root 8577 1 0 13:40 ? 00:00:00 /usr/local/bin/redis-server *:6390 [cluster]
root 8582 1 0 13:40 ? 00:00:00 /usr/local/bin/redis-server *:6391 [cluster]
root 8672 2228 0 13:40 pts/0 00:00:00 grep --color=auto redis

# Create the cluster; --cluster-replicas 1 gives every master one replica
# ./redis-cli --cluster create ${IP}:${PORT} ${IP}:${PORT} ${IP}:${PORT} ${IP}:${PORT} ${IP}:${PORT} ${IP}:${PORT} --cluster-replicas 1
[root@localhost redis]# /usr/local/bin/redis-cli --cluster create 192.168.66.133:6379 192.168.66.133:6380 192.168.66.133:6381 192.168.66.133:6389 192.168.66.133:6390 192.168.66.133:6391 --cluster-replicas 1
>>> Performing hash slots allocation on 6 nodes...
Master[0] -> Slots 0 - 5460
Master[1] -> Slots 5461 - 10922
Master[2] -> Slots 10923 - 16383
Adding replica 192.168.66.133:6390 to 192.168.66.133:6379
Adding replica 192.168.66.133:6391 to 192.168.66.133:6380
Adding replica 192.168.66.133:6389 to 192.168.66.133:6381
>>> Trying to optimize slaves allocation for anti-affinity
[WARNING] Some slaves are in the same host as their master
M: b8a032204ae864920827c9825bc1df5c2bf19f8f 192.168.66.133:6379
   slots:[0-5460] (5461 slots) master
M: a970ee79f6939aa6f15dbba55e7cfc32f4de193f 192.168.66.133:6380
   slots:[5461-10922] (5462 slots) master
M: 79074d9fe1f4d59bc6e027c326112094ade7bf93 192.168.66.133:6381
   slots:[10923-16383] (5461 slots) master
S: d656dba4a474c9c840c44ce90d8ef847b36fff47 192.168.66.133:6389
   replicates b8a032204ae864920827c9825bc1df5c2bf19f8f
S: 2a54c3642936c5b19751d50664811df5f87696a3 192.168.66.133:6390
   replicates a970ee79f6939aa6f15dbba55e7cfc32f4de193f
S: 78fee4677ca3aeba72e7432926f887c0e0417aa5 192.168.66.133:6391
   replicates 79074d9fe1f4d59bc6e027c326112094ade7bf93
Can I set the above configuration? (type 'yes' to accept): yes
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join
..
>>> Performing Cluster Check (using node 192.168.66.133:6379)
M: b8a032204ae864920827c9825bc1df5c2bf19f8f 192.168.66.133:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
S: d656dba4a474c9c840c44ce90d8ef847b36fff47 192.168.66.133:6389
   slots: (0 slots) slave
   replicates b8a032204ae864920827c9825bc1df5c2bf19f8f
M: a970ee79f6939aa6f15dbba55e7cfc32f4de193f 192.168.66.133:6380
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
M: 79074d9fe1f4d59bc6e027c326112094ade7bf93 192.168.66.133:6381
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 78fee4677ca3aeba72e7432926f887c0e0417aa5 192.168.66.133:6391
   slots: (0 slots) slave
   replicates 79074d9fe1f4d59bc6e027c326112094ade7bf93
S: 2a54c3642936c5b19751d50664811df5f87696a3 192.168.66.133:6390
   slots: (0 slots) slave
   replicates a970ee79f6939aa6f15dbba55e7cfc32f4de193f
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.

# Start the client; -c enables cluster mode, so the client follows redirections to other nodes
[root@localhost redis]# /usr/local/bin/redis-cli -p 6379 -c

# Test: each key is redirected to the master that owns its hash slot
127.0.0.1:6379> set k1 v1
-> Redirected to slot [12706] located at 192.168.66.133:6381
OK
192.168.66.133:6381> set k2 v2
-> Redirected to slot [449] located at 192.168.66.133:6379
OK
192.168.66.133:6379> set k3 v3
OK
192.168.66.133:6379> set k4 v4
-> Redirected to slot [8455] located at 192.168.66.133:6380
OK
192.168.66.133:6380> set k5 v5
-> Redirected to slot [12582] located at 192.168.66.133:6381
OK
192.168.66.133:6381> set k6 v6
-> Redirected to slot [325] located at 192.168.66.133:6379
OK
# dbsize counts only the keys stored on the current node (k2, k3, k6)
192.168.66.133:6379> dbsize
(integer) 3
192.168.66.133:6379>
```
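Which node a key lands on is decided by its hash slot. Per the Redis Cluster specification, the slot is CRC16(key) mod 16384, using the XMODEM variant of CRC16. If the key contains a non-empty `{...}` hash tag, only the tag is hashed. Splitting the 16384 slots over three masters gives the 5461 / 5462 / 5461 ranges shown above. The sketch below (hypothetical class name) reproduces the redirections in the test: k1 hashes to slot 12706 (owned by 6381) and k2 to slot 449 (owned by 6379).

```java
import java.nio.charset.StandardCharsets;

// Key -> hash slot mapping following the Redis Cluster specification
final class ClusterSlot {
    private ClusterSlot() {}

    static final int SLOTS = 16384;

    // CRC16, XMODEM variant (polynomial 0x1021, initial value 0), computed bit by bit
    static int crc16(byte[] bytes) {
        int crc = 0;
        for (byte b : bytes) {
            crc ^= (b & 0xFF) << 8;
            for (int i = 0; i < 8; i++) {
                crc = ((crc & 0x8000) != 0) ? (crc << 1) ^ 0x1021 : crc << 1;
                crc &= 0xFFFF;
            }
        }
        return crc;
    }

    // If the key has a non-empty {hash tag}, only the tag is hashed, so related keys share a slot
    static int slot(String key) {
        String hashed = key;
        int open = key.indexOf('{');
        if (open >= 0) {
            int close = key.indexOf('}', open + 1);
            if (close > open + 1) hashed = key.substring(open + 1, close);
        }
        return crc16(hashed.getBytes(StandardCharsets.UTF_8)) & (SLOTS - 1); // same as % 16384
    }
}
```

Hash tags are how multi-key operations are kept working in a cluster. For example, `{user1000}.following` and `{user1000}.followers` always map to the same slot.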

# Redis Use Cases

- Caching hot data
- Caching user information for single sign-on
- Distributed locks

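# Accessing Redis from Java

With its default configuration, Redis accepts connections only from the local machine.

If the application and the Redis server run on different machines, edit redis.conf:

- comment out bind 127.0.0.1;
- change protected-mode yes to protected-mode no.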

```shell
vi /path/to/redis.conf
```

```conf
################################## NETWORK #####################################
# bind 127.0.0.1
protected-mode no
```

# Jedis

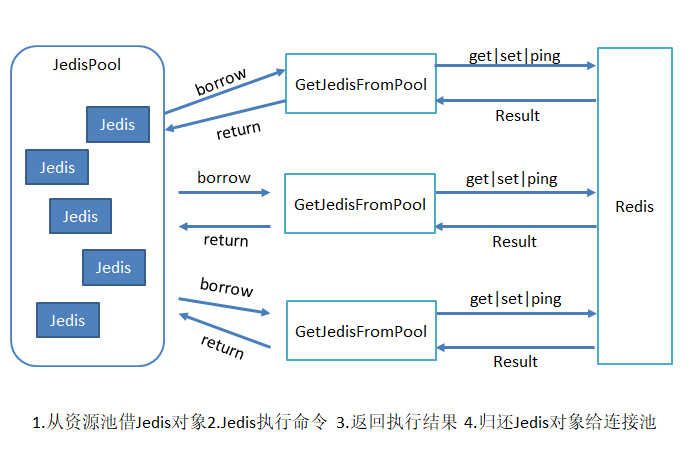

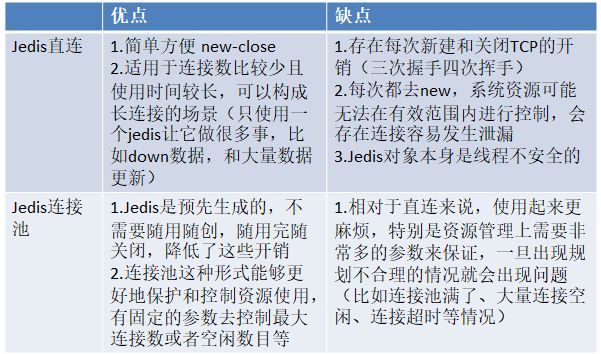

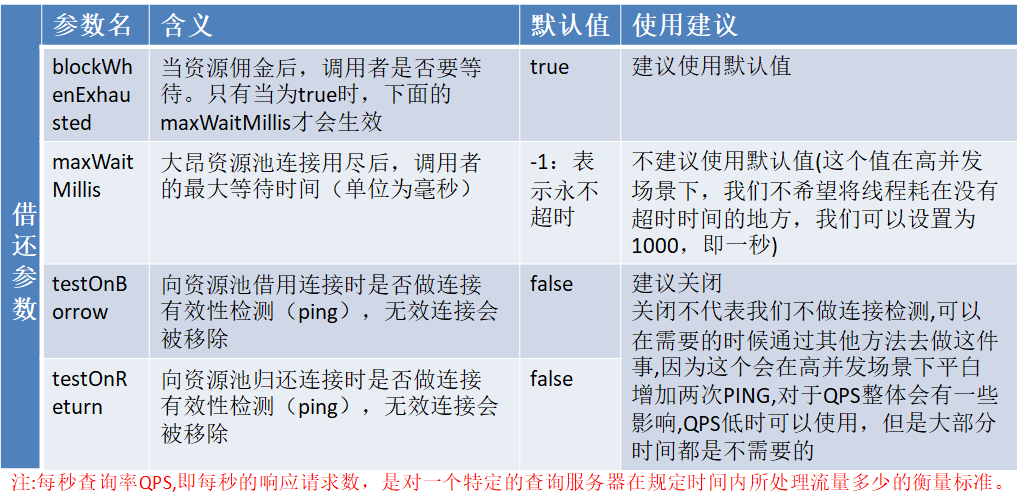

Jedis直连

Jedis连接池

两种方式比较

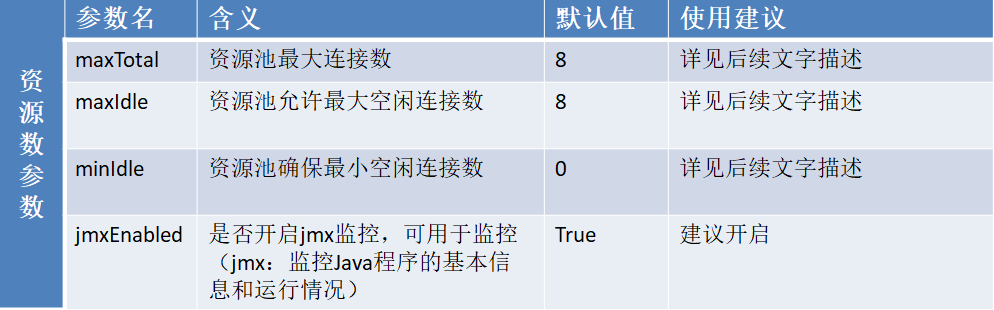

配置优化

```xml
<!-- https://mvnrepository.com/artifact/redis.clients/jedis -->
<dependency>
    <groupId>redis.clients</groupId>
    <artifactId>jedis</artifactId>
    <version>3.5.2</version>
</dependency>
```

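```java
import java.util.HashSet;
import java.util.Set;

import redis.clients.jedis.HostAndPort;
import redis.clients.jedis.Jedis;
import redis.clients.jedis.JedisCluster;
import redis.clients.jedis.JedisPool;
import redis.clients.jedis.JedisPoolConfig;

public class JedisDemo {
    public static void main(String[] args) {
        // Direct connection: one Jedis object wraps one TCP connection and is not thread-safe
        Jedis direct = new Jedis("192.168.66.133", 6379);
        System.out.println(direct.ping());
        direct.close();

        // Connection pool
        JedisPoolConfig jedisPoolConfig = new JedisPoolConfig();
        jedisPoolConfig.setMaxTotal(10);        // at most 10 connections in the pool
        jedisPoolConfig.setMaxWaitMillis(1000); // wait at most 1000 ms for a free connection
        JedisPool jedisPool = new JedisPool(jedisPoolConfig, "192.168.66.133", 6379);
        Jedis jedis = null;
        try {
            jedis = jedisPool.getResource();
            System.out.println(jedis.ping());
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            if (jedis != null) {
                jedis.close(); // for a pooled connection, close() returns it to the pool
            }
        }
        jedisPool.close();

        // Cluster: the client discovers the remaining nodes from these seed nodes
        Set<HostAndPort> nodes = new HashSet<>();
        nodes.add(new HostAndPort("192.168.66.133", 6379));
        nodes.add(new HostAndPort("192.168.66.133", 6380));
        nodes.add(new HostAndPort("192.168.66.133", 6381));
        JedisCluster jedisCluster = new JedisCluster(nodes);
        jedisCluster.close();
    }
}
```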

# RedisTemplate

```xml
<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-parent</artifactId>
            <version>2.3.5.RELEASE</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-redis</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
    </dependency>
    <!-- Redis value serialization (FastJsonRedisSerializer) -->
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>1.2.75</version>
    </dependency>
    <!-- Connection pooling for Redis requires commons-pool2 -->
    <dependency>
        <groupId>org.apache.commons</groupId>
        <artifactId>commons-pool2</artifactId>
    </dependency>
</dependencies>
```

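```yaml
# Auto-configuration class: RedisAutoConfiguration
# Configuration properties: RedisProperties
spring:
  redis:
    # Standalone
    # host: 192.168.66.133
    # port: 6379
    # Cluster
    cluster:
      nodes:
        - 192.168.66.133:6379
        - 192.168.66.133:6390
        - 192.168.66.133:6391
    # Connection pool (standalone or cluster). Spring Boot 2.x connects to Redis through the Lettuce client,
    # which does not use a connection pool by default; the pool is used only when the properties below are set
    lettuce:
      shutdown-timeout: 100 # shutdown timeout (ms)
      pool:
        max-active: 8 # maximum number of connections in the pool (negative value = no limit)
        max-idle: 8   # maximum number of idle connections
        max-wait: 30  # maximum time to block waiting for a connection, in ms (negative value = no limit)
        min-idle: 0   # minimum number of idle connections
```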

22

```java
import com.alibaba.fastjson.support.spring.FastJsonRedisSerializer;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.data.redis.serializer.StringRedisSerializer;

@Configuration
public class RedisConfig {

    @Bean
    public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory redisConnectionFactory) {
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        // Keys and hash keys are serialized with StringRedisSerializer
        StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();
        template.setKeySerializer(stringRedisSerializer);
        template.setHashKeySerializer(stringRedisSerializer);
        // Values and hash values are serialized as JSON with FastJsonRedisSerializer
        FastJsonRedisSerializer<Object> fastJsonRedisSerializer = new FastJsonRedisSerializer<>(Object.class);
        template.setValueSerializer(fastJsonRedisSerializer);
        template.setHashValueSerializer(fastJsonRedisSerializer);
        template.setConnectionFactory(redisConnectionFactory);
        return template;
    }

    @Bean
    public StringRedisTemplate stringRedisTemplate(RedisConnectionFactory redisConnectionFactory) {
        // StringRedisTemplate already uses StringRedisSerializer for keys, values and hash entries;
        // a JSON value serializer here would store strings wrapped in quotes
        return new StringRedisTemplate(redisConnectionFactory);
    }
}
```

```java
import org.junit.Test;
import org.junit.runner.RunWith;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.test.context.junit4.SpringRunner;

// SpringRunner starts the Spring context for this JUnit 4 test so that @Autowired fields are injected;
// without it redisTemplate stays null and the test fails with a NullPointerException
@RunWith(SpringRunner.class)
@SpringBootTest
public class TestRedis {

    @Autowired
    private RedisTemplate<String, Object> redisTemplate;

    @Test
    public void test1() {
        // Data types
        // redisTemplate.opsForValue()        strings
        // redisTemplate.opsForList()         lists
        // redisTemplate.opsForSet()          sets (unordered)
        // redisTemplate.opsForZSet()         sorted sets
        // redisTemplate.opsForHash()         hashes
        // redisTemplate.opsForHyperLogLog()  HyperLogLog
        // redisTemplate.opsForGeo()          geospatial data

        // Key operations
        // redisTemplate.keys(pattern)
        // redisTemplate.hasKey(key)
        // redisTemplate.delete(key)
        // redisTemplate.expire(key, timeout, unit)

        // Transactions
        // redisTemplate.watch(key)
        // redisTemplate.multi()
        // redisTemplate.exec()
        // redisTemplate.discard()

        // Strings
        redisTemplate.opsForValue().set("k1", "v1");
        redisTemplate.opsForValue().set("k2", "v2");
        redisTemplate.opsForValue().set("k3", "v3");
        redisTemplate.opsForValue().set("k4", "v4");
        redisTemplate.opsForValue().set("k5", "v5");
        redisTemplate.opsForValue().set("k6", "v6");
        redisTemplate.opsForValue().set("k7", "v7");
        redisTemplate.opsForValue().set("k8", "v8");
        redisTemplate.opsForValue().set("k9", "v9");
    }
}
```